
Or one may wish to use Elasticsearch and Kibana for analysing a dataset that is only available in a spreadsheet.

For example, one may need to get Master Data that is created in a spreadsheet into Elasticsearch where it could be used for enriching Elasticsearch documents. For various reasons it may be useful to import such data into Elasticsearch.

#Filebeats set document id for free#

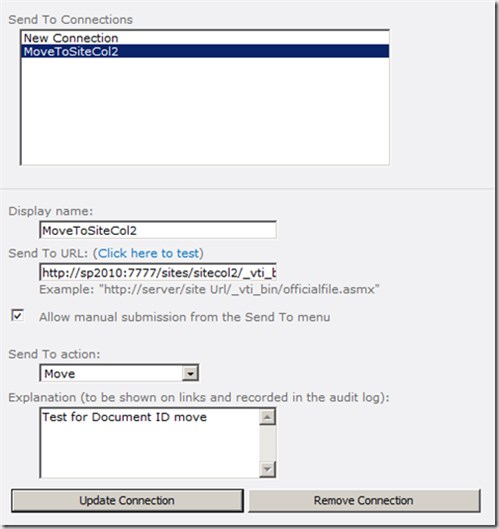

You can spin up a cluster for free at Elastic Cloud OR download the Elasticsearch and Kibana.Many organisations use excel files for creating and storing important data. You need an Elasticsearch cluster to run below commands. Cannot perform operations like clone, delete, close, freeze, shrink, split on a write index because these operations could hinder indexing.

#Filebeats set document id update#

Can update documents in older hidden indices only by update_by_query, delete_by_query. Cannot add new documents to other read-only indices. Every data stream should have a backing index template (I wrote about index templates here.) An index template that is in use by a data stream cannot be deleted. Every document indexed into the Data stream should have a field. End of the day, data is still stored in Elasticsearch indices. The difference is you always write to one index while keep querying on the entire hidden collection of indices.ĭifference between an Index and Data streamĪ Data stream still contains a collection of hidden auto-generated indices. It rolls over the index automatically based on the index lifecycle policy conditions that you have set.ĭata streams are like aliases with superpowers. Logs, metrics, traces are time-series data sources that generate in a streaming fashion.Įlasticsearch Data stream is a collection of hidden automatically generated indices that store the streaming logs, metrics, or traces data. 😅 For beginners looking to get started, they might feel scaling the Elasticsearch cluster data overwhelming with all these concepts. It might sound like everything I wrote in the previous paragraphs. You always refer to the latest one! What's the problem? Thereby, in time-series data indexing, you could always use the alias to query the latest data while indices keep rotating daily behind. Data, when ingested through Filebeat, Filebeat manages the index rotation.Įlasticsearch also has a concept called Aliases, which is a secondary name to an Elasticsearch index. If you are using Filebeat to ship logs, the index is rolled over to a new one daily or based on the size threshold by default. What folks do is time-based indexing, rolling over the data into a newer index based on time or size. This feature is called Searchable Snapshots. 
Note: You could store data on Cloud Object Stores like S3, GCS, ACS and directly query it from Elasticsearch in a faster manner. It could be for various reasons - active data engineering work, gathering customer behavior trends, insights, etc. Can I neglect and store fewer amounts of data?īut most teams store at least six months of data on Elasticsearch Cluster or even any on other platforms. Now multiply it with the number of days, it starts to look prominent. #Filebeats set document id pro#

Timeseries data is voluminous and snowballs quickly.įor example: For an average user, syslogs from MacBook pro might be anything from the range of 300mb-1Gb per day. I wrote at length about Elasticsearch Index, and please refer if you are new to the concept. Besides, with tools like Metricbeat and APM, Elasticsearch became home for metrics and traces too.Īll these different data categories are stored in a simple index that lets you search, correlate and take action. Elasticsearch, aka ELK stack, is the defacto home for devs doing log analytics for years.
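The daily-index-plus-alias pattern described above can be sketched as follows. This is a minimal sketch under stated assumptions: the daily index names (`logs-myapp-YYYY.MM.DD`) and the alias name `logs-myapp-latest` are hypothetical, and the code only builds the JSON body for Elasticsearch's `POST _aliases` endpoint; it does not contact a cluster.

```python
import json
from datetime import date, timedelta


def daily_index(prefix: str, day: date) -> str:
    """Hypothetical daily index naming, e.g. logs-myapp-2021.05.01."""
    return f"{prefix}-{day:%Y.%m.%d}"


def move_alias_actions(prefix: str, alias: str, today: date) -> dict:
    """Body for POST _aliases that repoints `alias` from yesterday's index to today's.

    Elasticsearch applies all actions in one _aliases request atomically,
    so searches on the alias never see a moment with no index behind it.
    """
    yesterday = today - timedelta(days=1)
    return {
        "actions": [
            {"remove": {"index": daily_index(prefix, yesterday), "alias": alias}},
            {"add": {"index": daily_index(prefix, today), "alias": alias}},
        ]
    }


if __name__ == "__main__":
    body = move_alias_actions("logs-myapp", "logs-myapp-latest", date(2021, 5, 2))
    print("POST _aliases")
    print(json.dumps(body, indent=2))
```

Queries then target `logs-myapp-latest` and never need to know which dated index is current; a data stream takes over this bookkeeping for you.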
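To make "write to one index, query the whole hidden collection" concrete, here is a toy in-memory model. It is not Elasticsearch code: the document-count rollover threshold stands in for an index lifecycle policy, and the `.ds-<data-stream>-<yyyy.MM.dd>-<generation>` backing index naming mirrors what recent Elasticsearch versions use (treat the exact format as an assumption).

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ToyDataStream:
    """In-memory toy model of a data stream (not Elasticsearch code)."""

    name: str
    max_docs: int  # hypothetical rollover condition standing in for an ILM policy
    start: date
    backing: list = field(default_factory=list)  # [(index_name, [docs]), ...]

    def __post_init__(self) -> None:
        # A new data stream starts with a single backing index (generation 1).
        self.rollover(self.start)

    def rollover(self, day: date) -> None:
        generation = len(self.backing) + 1
        index_name = f".ds-{self.name}-{day:%Y.%m.%d}-{generation:06d}"
        self.backing.append((index_name, []))

    @property
    def write_index(self) -> str:
        return self.backing[-1][0]

    def append(self, doc: dict, day: date) -> str:
        if "@timestamp" not in doc:
            raise ValueError("data stream documents need a @timestamp field")
        if len(self.backing[-1][1]) >= self.max_docs:
            self.rollover(day)
        # New documents only ever land in the latest (write) backing index.
        self.backing[-1][1].append(doc)
        return self.write_index

    def search(self, predicate) -> list:
        # Reads fan out over every backing index, old and new.
        return [d for _, docs in self.backing for d in docs if predicate(d)]


if __name__ == "__main__":
    ds = ToyDataStream("logs-myapp-default", max_docs=2, start=date(2021, 5, 1))
    for i in range(3):
        ds.append({"@timestamp": f"2021-05-01T00:00:0{i}Z", "n": i}, date(2021, 5, 1))
    print([name for name, _ in ds.backing])
    # ['.ds-logs-myapp-default-2021.05.01-000001', '.ds-logs-myapp-default-2021.05.01-000002']
    print(len(ds.search(lambda d: True)))  # 3
```

The third document triggers a rollover, so it is written to the second backing index, while a search still returns all three documents.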
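The setup described above (an index template that turns matching names into data streams, plus a lifecycle policy that drives rollover) can be sketched as request bodies. This is a sketch under assumptions: the names `logs-myapp-*`, `logs-myapp-policy`, and `logs-myapp-template`, the rollover thresholds, and the 180-day retention (roughly the six months mentioned earlier) are all hypothetical choices. The bodies are meant for `PUT _ilm/policy/<name>` and `PUT _index_template/<name>`; the code only builds and prints them.

```python
import json

# Hypothetical names; adjust to your own naming scheme.
POLICY_NAME = "logs-myapp-policy"
TEMPLATE_NAME = "logs-myapp-template"


def ilm_policy(max_size="50gb", max_age="1d", delete_after="180d") -> dict:
    """Body for PUT _ilm/policy/<POLICY_NAME>.

    The hot phase rolls the write index over when either threshold is hit;
    the delete phase removes backing indices once they reach `delete_after`.
    """
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        "rollover": {
                            "max_primary_shard_size": max_size,
                            "max_age": max_age,
                        }
                    }
                },
                "delete": {"min_age": delete_after, "actions": {"delete": {}}},
            }
        }
    }


def index_template(pattern="logs-myapp-*", policy=POLICY_NAME) -> dict:
    """Body for PUT _index_template/<TEMPLATE_NAME>.

    The empty "data_stream" object makes matching names become data streams
    instead of regular indices; @timestamp is mapped as a date.
    """
    return {
        "index_patterns": [pattern],
        "data_stream": {},
        # Higher than the built-in templates' priority, to avoid clashing with logs-*-*.
        "priority": 500,
        "template": {
            "settings": {"index.lifecycle.name": policy},
            "mappings": {"properties": {"@timestamp": {"type": "date"}}},
        },
    }


if __name__ == "__main__":
    print(f"PUT _ilm/policy/{POLICY_NAME}")
    print(json.dumps(ilm_policy(), indent=2))
    print(f"PUT _index_template/{TEMPLATE_NAME}")
    print(json.dumps(index_template(), indent=2))
```

Once both exist, indexing the first document into a matching name such as `logs-myapp-default` creates the data stream and its first hidden backing index.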
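Because a data stream is append-only, bulk requests against it must use the `create` action rather than `index`, and every document needs `@timestamp`. The sketch below builds an NDJSON body for `POST <data-stream>/_bulk` and rejects documents without `@timestamp` before anything is sent. The `id_field` option, which copies a field's value into the document `_id`, is a hypothetical convenience, not part of any Elasticsearch client.

```python
import json
from typing import Iterable, Optional


def to_bulk_ndjson(docs: Iterable[dict], id_field: Optional[str] = None) -> str:
    """Build an NDJSON body for POST <data-stream>/_bulk.

    Every action is "create", because a data stream only accepts new
    documents (on its write index). When `id_field` is set, that field's
    value becomes the document _id; otherwise Elasticsearch generates one.
    """
    lines = []
    for doc in docs:
        # Data streams reject documents without a @timestamp field.
        if "@timestamp" not in doc:
            raise ValueError(f"document is missing @timestamp: {doc!r}")
        meta = {}
        if id_field is not None:
            meta["_id"] = str(doc[id_field])
        lines.append(json.dumps({"create": meta}))
        lines.append(json.dumps(doc))
    # The bulk API expects every line, including the last, to end with "\n".
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    events = [
        {"@timestamp": "2021-05-01T10:00:00Z", "event_id": 42, "message": "user logged in"},
    ]
    print(to_bulk_ndjson(events, id_field="event_id"), end="")
    # {"create": {"_id": "42"}}
    # {"@timestamp": "2021-05-01T10:00:00Z", "event_id": 42, "message": "user logged in"}
```

Setting your own `_id` protects against duplicate writes only within the current write index: `_id` uniqueness is enforced per backing index, so after a rollover the same `_id` can be written again into the new write index.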

0 kommentar(er)

0 kommentar(er)